Authors: Mikael Manngård (Novia UAS)

#VirtualSeaTrial

AI Helper – Hackathon March 2024

Theme: Create an AI-helper Avatar

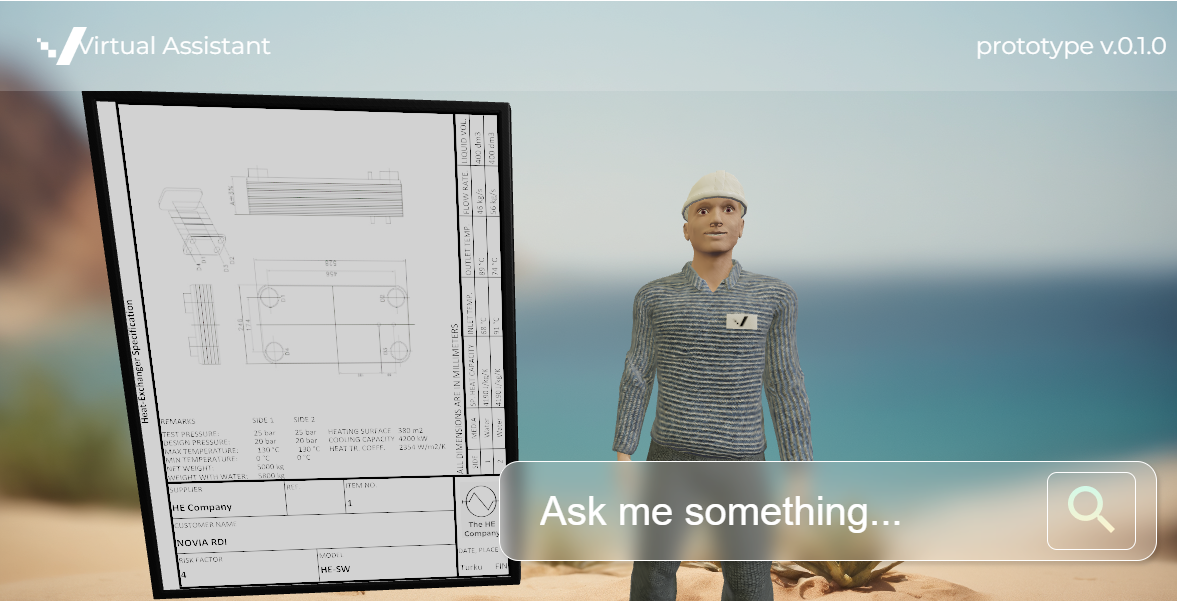

It was a spring morning in March, and the Virtual Sea Trial project was hosting its first hackathon of the year. The team was challenged to build an AI-powered avatar that retrieves information from engineering documents. With just eight hours on the clock, we worried about whether we could get everything done in time. Everything turned out well in the end: only minor bug fixes remained unresolved after the event. The outcome is a web application showcased in the demonstration below.

![]()

The goal of the virtual assistant is to show that the creation of tests and simulation models can be automated with the help of AI. Engineering specifications in PDF form are generally unstructured documents, and manually searching them for specific information, such as parameter values and dimensions, is tedious and time-consuming. Automating this process therefore has the potential to save design engineers and simulation experts a great deal of time. The proposed solution is to let an AI-based tool convert the information in the PDF into a structured and searchable format.

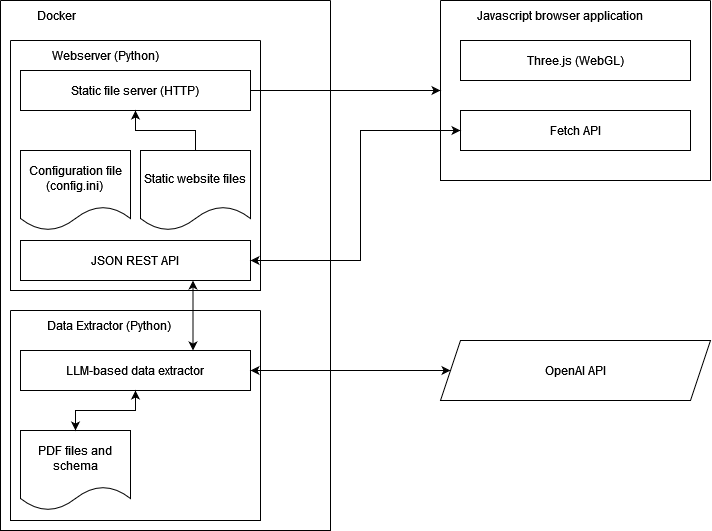

How does it work? The demo you see above is a web application running in Hugging Face Spaces. The project contains a Python backend with an LLM server and a frontend browser application. The communication between backend and frontend happens over Socket.IO. The application architecture is summarized below.

Fig. Frontend and backend architecture of the web application.
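To make the event-based exchange between frontend and backend more concrete, here is a minimal sketch in plain Python. It does not use the Socket.IO library itself: `EventBus` is a hypothetical stand-in that only mimics the pattern of named events carrying JSON payloads, and the event names `question` and `answer` are assumptions, not the names used in the project.

```python
import json


class EventBus:
    """Hypothetical stand-in for an event-based transport such as Socket.IO."""

    def __init__(self):
        self._handlers = {}
        self.outbox = []  # messages that would be sent back to the browser

    def on(self, event):
        """Decorator that registers a handler for a named event."""
        def register(func):
            self._handlers[event] = func
            return func
        return register

    def emit(self, event, data):
        # Round-trip through JSON to check the payload could go over the wire.
        self.outbox.append((event, json.loads(json.dumps(data))))

    def receive(self, event, data):
        """Simulate an incoming event from the frontend."""
        self._handlers[event](data)


bus = EventBus()


@bus.on("question")  # assumed event name
def handle_question(data):
    # A real backend would call the LLM here; this returns a placeholder.
    bus.emit("answer", {"question": data["text"],
                        "answer": "(LLM reply goes here)"})


bus.receive("question", {"text": "What is the propeller diameter?"})
```

In the actual application the frontend would emit the question from the browser and render the answer next to the avatar; the sketch only shows the shape of the exchange.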

The backend LLM server performs two functions:

- It converts the information in a PDF into a structured JSON format that is easily searchable.

- It answers a user-defined question by searching the JSON, grounding its response in information originating from the PDF.
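The second function, searching the JSON to ground a response, can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `SPEC` schema and its values are invented, the keyword-overlap ranking is a simple substitute for whatever retrieval the package actually uses, and the prompt is only built, not sent to a model.

```python
import json
import re

# Hypothetical output of the PDF-to-JSON step; the project's real schema
# is not described in this post, and these values are made up.
SPEC = {
    "engine": {"rated_power_kw": 9600, "cylinders": 8},
    "propeller": {"diameter_m": 5.2, "blades": 4},
}


def flatten(node, prefix=""):
    """Yield (dotted_path, value) pairs for every leaf in a nested dict."""
    for key, value in node.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            yield from flatten(value, path)
        else:
            yield path, value


def search(spec, question, top_k=3):
    """Rank leaves by how many question words occur in their path."""
    words = set(re.findall(r"[a-z0-9]+", question.lower()))
    scored = []
    for path, value in flatten(spec):
        tokens = set(re.findall(r"[a-z0-9]+", path.lower()))
        score = len(words & tokens)
        if score:
            scored.append((score, path, value))
    # Highest score first; ties broken alphabetically by path.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(path, value) for _, path, value in scored[:top_k]]


def build_prompt(question, hits):
    """Combine the retrieved values and the question into one grounded prompt."""
    context = json.dumps(dict(hits), indent=2)
    return (
        "Answer using only the specification values below.\n"
        f"Specification:\n{context}\n"
        f"Question: {question}"
    )


question = "What is the propeller diameter?"
hits = search(SPEC, question)
prompt = build_prompt(question, hits)
```

The resulting `prompt` would then be passed to the language model, so that the answer is tied to values extracted from the document rather than to the model's general knowledge.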

Currently, it uses GPT-4 with vision for the first function and GPT-3.5 for the second. All of this functionality is collected in a Python package that can be installed via pip. See the documentation in the Git repository for a more detailed description.

Prototypes of many of the AI functionalities were developed before the hackathon. Thus, as Christian points out, the backend development consisted mainly of refactoring and documenting the AI code.

Prior to the hackathon, our team had already developed the core AI functionalities in an experimental setting. For the scope of the hackathon, my colleague (Christoffer Björkskog) and I teamed up to bundle the AI functions into a Python package and create API endpoints.

Project Researcher, Christian Möller

The frontend is a web application using HTML, CSS and JavaScript making use of the Three.js library. The 3D models and animations had mostly been prepared beforehand. Work during the hackathon consisted of migrating everything to the web application and finalizing the UI layout.

We also set up the scene. That is, we positioned the models, the camera, and the text fields to match the overall layout in the latest mockups.

Project Researcher, Jussi Haapasaari

A pair of XR applications was developed alongside the web application. The aim was to showcase the possibilities of XR, and an AI-assisted tool for data retrieval is a good way to demonstrate what XR tools developed during the VST project could look like. For this hackathon, the goal was to bring the 3D models and animations used in the web application into the Unity3D environment and create one example AR application and one example VR application. The target devices were Meta Quest 3 and Meta Quest Pro. The OpenXR toolkit was used for VR, and the Meta MR Utility Kit for AR. These demo solutions will serve as a basis for further development. Below is a screenshot from the AR solution.

Fig. XR Avatar viewed from a Meta Quest headset.

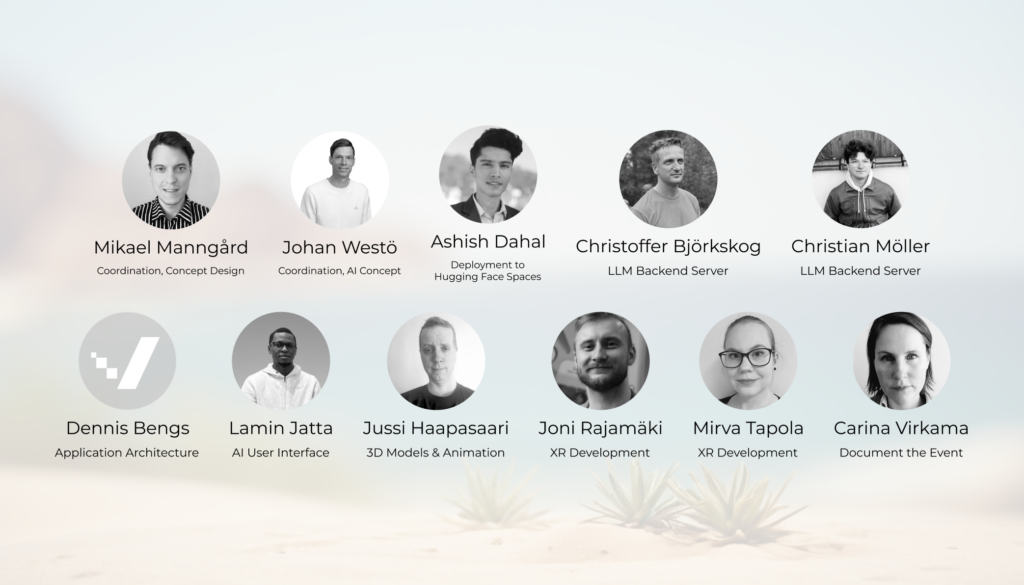

The team participating in the hackathon: